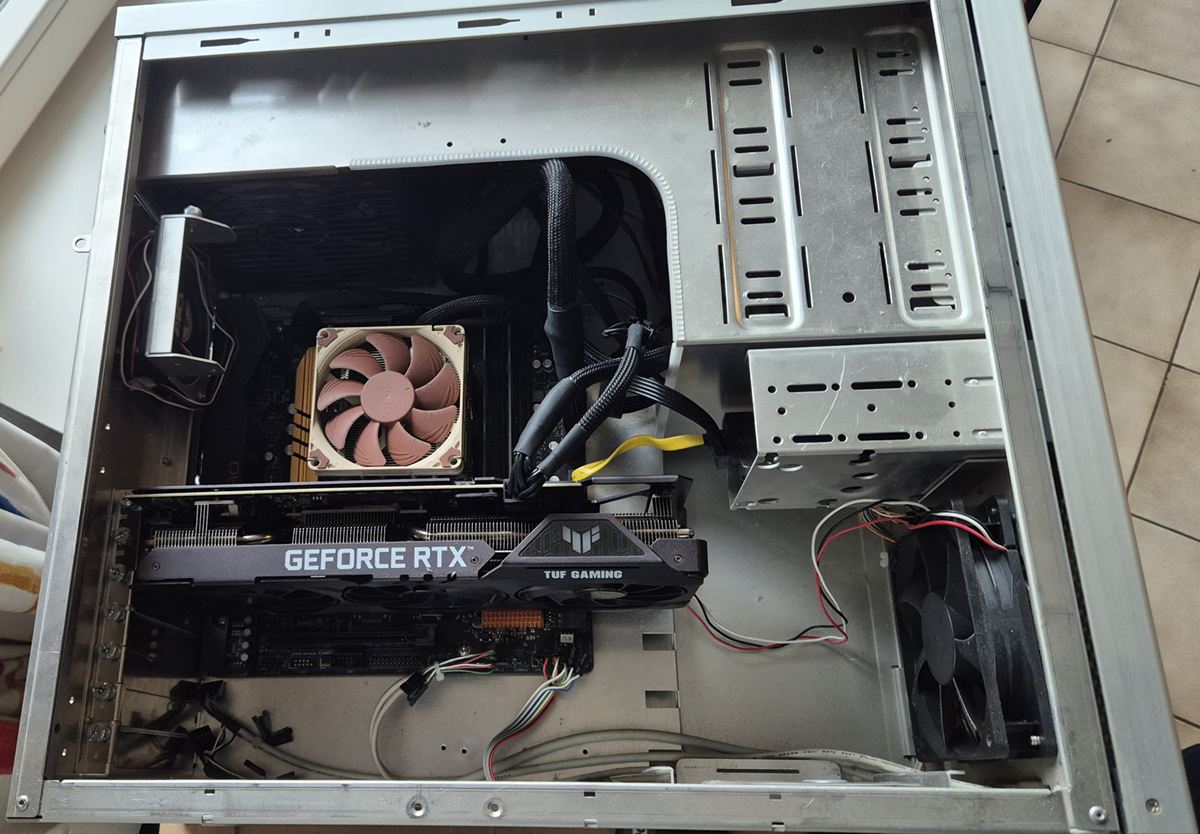

Dedicated HTPC running Windows 10 with Kodi/DSPlayer 17.7 and MadVR:

This PC is used purely as a media player.

It cannot connect to the internet (the iptables firewall drops all of its traffic), so running Windows 10 is not a concern.

| Component | Details |
| --- | --- |
| Mainboard | Asrock H170M Pro4 rev 1.02, socket 1151. Slots: 2x PCIe 3.0 x16 (PCIE1: x16 mode; PCIE4: x4 mode), 2x PCIe 3.0 x1 (Flexible PCIe). 6x SATA3, 1x Ultra M.2 (PCIe Gen3 x4 & SATA3), 8x USB 3.0, Intel i219 Gigabit LAN. Graphics outputs: D-Sub, DVI-D, HDMI, DisplayPort 1.2 |
| CPU | Intel i3-7100 @ 3.9 GHz (Kaby Lake), socket 1151, 2 cores, 4 threads, 3 MB L3 cache, TDP 51 W. Intel® HD Graphics 630, DirectX 12, resolution 4K @ 60 Hz |
| RAM | 16 GB: 2x 8 GB Crucial CT8G4DFS824A, DDR4-2400 (PC4-19200), CL17, single-rank x8, unbuffered non-ECC, 1.2 V, 1024Meg x 64 |
| CPU cooler | Noctua NH-L9x65, semi-low-profile |
| SSD | Crucial M4 SSD 250GB |
| Power supply | Corsair RM550x, 550 W, semi-passive, 80 Plus Gold certified |
| Case | Lian Li PC60 PlusII |
| GPU | ASUS TUF Gaming GeForce RTX 3060 Ti V2 OC Edition: max. 1.785 GHz, 8 GB GDDR6 at 14 Gbps, 256-bit, 3x DP, 2x HDMI 2.1, 4864 cores, PCIe 4.0 x16 |

The media player is Kodi 17.7 with DSPlayer integrated. It uses DirectX 11 with MadVR as the renderer. MadVR is known for its excellent HDR-to-SDR mapping capabilities.

It is used in semi-professional products such as the MadVR Envy scaler/PC solution.

Every frame that is read from the source is transferred to the GPU for scaling, rendering and mapping calculations.

Since films run at 24 fps (23.976 fps, to be exact), a new frame arrives roughly every 41.7 ms. The calculations need to be finished well within that interval. After that, the frame is transferred from CPU to GPU for display.
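The frame interval quoted above is simply 1000 ms divided by the frame rate. As a minimal sketch of that arithmetic (the function name `frame_interval_ms` is made up for this illustration, and 24000/1001 is the exact rate usually abbreviated to "23.976"):

```python
from fractions import Fraction


def frame_interval_ms(fps: Fraction) -> float:
    """Return the time between two consecutive frames in milliseconds."""
    return float(1000 / fps)


FILM_NOMINAL = Fraction(24, 1)      # nominal film rate
FILM_NTSC = Fraction(24000, 1001)   # exact value behind "23.976 fps"

print(f"24 fps     -> {frame_interval_ms(FILM_NOMINAL):.2f} ms per frame")
print(f"23.976 fps -> {frame_interval_ms(FILM_NTSC):.2f} ms per frame")
```

Both rates land within a few hundredths of a millisecond of each other, so either one gives the same practical render budget.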

Therefore a strong GPU like the RTX 3060 Ti is needed. MadVR offers many "quality" options that can be enabled if the GPU can handle them.

For the CPU, a simple i3 is sufficient. Its load does not even exceed 15% during playback.

The previous RTX 2060 took almost 38 ms per frame, so I had to disable some of these options. It also produced audible fan noise.

The current setup with the RTX 3060 Ti handles a UHD 24 fps HDR source with a total render time of about 22 ms, so I do not have to compromise on quality.

Buying an even faster GPU makes no sense: if rendering finished in 10 ms, the PC would simply wait about 30 ms until the next frame is due, roughly 41.7 ms after the previous one.
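To make the headroom argument concrete, here is a rough sketch that compares the render times mentioned in the text against the frame interval. The helper `headroom` and the dictionary layout are assumptions for illustration only; the 10 ms entry is the hypothetical faster GPU, not a measured card.

```python
# Frame interval at 23.976 fps (24000/1001), about 41.71 ms.
FRAME_INTERVAL_MS = 1001 / 24


def headroom(render_ms: float, interval_ms: float = FRAME_INTERVAL_MS):
    """Return (idle time in ms, fraction of the frame interval used)."""
    idle_ms = interval_ms - render_ms
    load = render_ms / interval_ms
    return idle_ms, load


# Render times quoted in the text; 10 ms is the hypothetical case.
render_times_ms = {
    "RTX 2060": 38.0,
    "RTX 3060 Ti": 22.0,
    "hypothetical faster GPU": 10.0,
}

for gpu, render_ms in render_times_ms.items():
    idle_ms, load = headroom(render_ms)
    print(f"{gpu:24s} render {render_ms:4.0f} ms, "
          f"idle {idle_ms:4.1f} ms, load {load:.0%}")
```

With these figures the RTX 2060 uses roughly nine tenths of each frame interval, the RTX 3060 Ti a little over half, and a 10 ms card would mostly sit idle waiting for the next frame.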
